Summary:

In a previous blog post, we shared how Microsoft Teams uses AI to remove distracting background noise from meetings and calls. This has been incredibly helpful in remote and mobile work settings, where users don’t always have full control over their environment.

Today, we want to spotlight new machine learning (ML) and AI-based features in Microsoft Teams that dramatically improve the sound quality of meetings and calls, even in the most challenging situations.

We have recently extended our ML model to prevent unwanted echo, a welcome addition for anyone whose train of thought has been derailed by hearing their own words come back at them.

This model goes a step further to improve dialogue over Teams by enabling “full duplex” sound. Now, users are able to speak and listen at the same time, allowing for interruptions that make the conversation seem more natural and less choppy.

Lastly, Teams uses AI to reduce reverberation, improving the quality of audio from users in rooms with poor acoustics. Users can now sound as if they’re speaking into a headset microphone, even in a large room where speech and other noise bounce from wall to wall. Audio captured in these challenging settings now sounds no different from a conversation in the office.

The demo below shows how the new ML model improves the Teams meeting experience.

Teams optimizes audio for echo, interruptibility and reverberation to improve the call experience

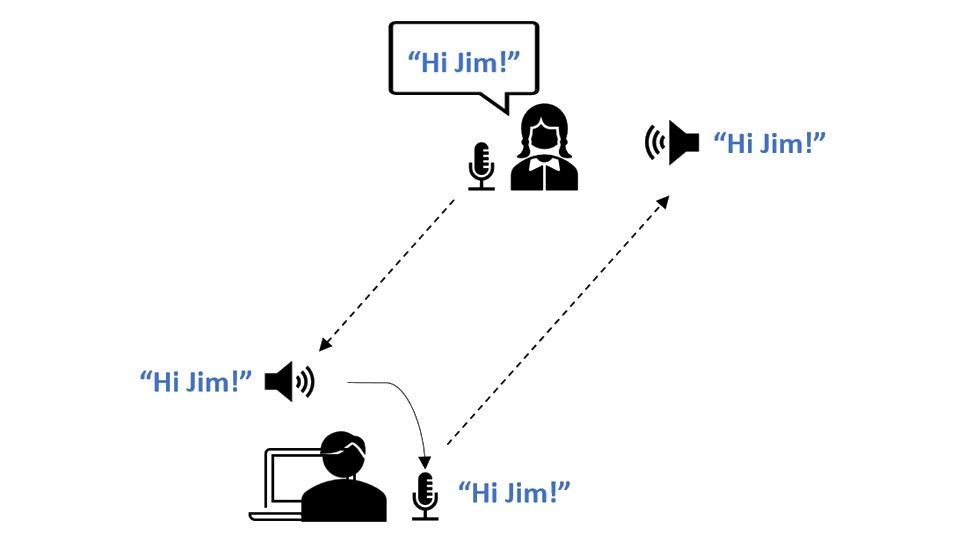

Echo is a common audio effect that can negatively impact online meetings when one of the participants is not using a headset and the signal from their loudspeaker gets captured by their microphone. As a result, the person on the other end of the call hears their own voice played back to them, which creates an echo.

An echo cancellation module has two tasks: recognize when sound from the loudspeaker gets into the microphone, and then remove it from the outbound audio. In many device setups, the loudspeaker is closer to the microphone than the end user is. In these situations, the echo signal arrives more loudly than the end user’s voice, making it challenging to remove the echo without also suppressing the end user’s speech, particularly when both parties speak at the same time. A canceller that cannot handle this double-talk effectively lets only one person talk at a time, which makes it difficult for users to interrupt remote attendees.
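To make the "traditional digital signal processing" baseline concrete, here is a minimal sketch of a classic normalized least-mean-squares (NLMS) adaptive echo canceller. It is a textbook technique, not the Teams ML model, and the helper names (`simulate_echo`, `nlms_echo_cancel`), the simulated echo path, the filter length and the step size are all illustrative assumptions. The filter learns the loudspeaker-to-microphone path from the far-end signal and subtracts its echo prediction from the microphone signal.

```python
import random

def simulate_echo(far_end, echo_path):
    # Hypothetical loudspeaker-to-microphone path: mic[n] = sum_k h[k] * far_end[n - k]
    return [
        sum(h * far_end[n - k] for k, h in enumerate(echo_path) if n - k >= 0)
        for n in range(len(far_end))
    ]

def nlms_echo_cancel(far_end, mic, taps=8, mu=0.5, eps=1e-6):
    """Classic NLMS acoustic echo canceller (a traditional DSP baseline).

    Returns (residual, weights). The residual is the microphone signal with the
    estimated echo subtracted, i.e. what would be sent to the remote party.
    """
    w = [0.0] * taps    # running estimate of the echo path
    buf = [0.0] * taps  # most recent far-end samples, newest first
    residual = []
    for x, d in zip(far_end, mic):
        buf = [x] + buf[:-1]
        echo_estimate = sum(wi * bi for wi, bi in zip(w, buf))
        e = d - echo_estimate                 # what remains after removing predicted echo
        norm = eps + sum(b * b for b in buf)  # normalize the step by far-end power
        w = [wi + mu * e * bi / norm for wi, bi in zip(w, buf)]
        residual.append(e)
    return residual, w

# Usage: far-end audio only (no near-end talker), so the ideal residual is silence.
random.seed(0)
far = [random.gauss(0.0, 1.0) for _ in range(2000)]
mic = simulate_echo(far, [0.6, 0.3, -0.1])
residual, w = nlms_echo_cancel(far, mic)
print("estimated echo path:", [round(v, 3) for v in w[:4]])
```

When the near-end user also talks, their speech lands in the error signal `e` and disturbs the weight update. Traditional cancellers therefore tend to freeze adaptation or apply extra suppression during double-talk, which produces the half-duplex behavior described above.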

Teams’ AI-based approach to echo cancellation and interruptibility addresses the shortcomings of traditional digital signal processing. We used data from thousands of devices to create a large dataset with approximately 30,000 hours of clean speech, and trained a model on it that can handle extreme audio conditions in real time.

In compliance with Microsoft’s strict privacy standards, no customer data was collected for this dataset. Instead, we used publicly available data or crowdsourcing to capture specific scenarios. We also ensured that the dataset was balanced between female and male speech and covered 74 different languages.

To avoid the added complexity of running separate models for noise suppression and echo cancellation, we combined the two into a single model through joint training. The resulting all-in-one model runs 10% faster than our noise-suppression-only model, without quality trade-offs.

In addition, we modified our training data to address reverberation, enabling the ML model to make any captured audio signal sound as if it were spoken into a close-range microphone. The dereverberation effect showcased in the video above is something traditional echo cancellers cannot do. Now, even if you’re having a meeting on a staircase while waiting for your kids to finish their swimming lesson, Teams can make your voice sound as though you’re in the office.
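The post does not say exactly how the training data was modified. One common way to build dereverberation training pairs, shown here as an assumption and not as the Teams recipe, is to convolve dry close-microphone speech with a room impulse response (RIR) and train the model to map the reverberant version back to the dry one. The helpers below (`synthetic_rir`, `convolve_same`, `make_dereverb_pair`) are hypothetical, and the RIR is a crude model: Gaussian noise under an exponential envelope whose amplitude falls by 60 dB after the reverberation time RT60.

```python
import math
import random

def synthetic_rir(rt60_s, sample_rate, seed=0):
    # Hypothetical crude RIR: noise whose amplitude drops 60 dB (a factor of 1000) after rt60_s
    rng = random.Random(seed)
    n = int(rt60_s * sample_rate)
    decay = math.log(1000.0) / rt60_s
    rir = [rng.gauss(0.0, 1.0) * math.exp(-decay * i / sample_rate) for i in range(n)]
    rir[0] = 1.0  # direct-path component
    return rir

def convolve_same(signal, kernel):
    # Causal linear convolution, truncated to the length of the input signal
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue
        for k, h in enumerate(kernel):
            if i + k >= len(out):
                break
            out[i + k] += s * h
    return out

def make_dereverb_pair(clean, rt60_s, sample_rate, seed=0):
    # Model input: speech as captured in a reverberant room.
    # Training target: the original dry, close-microphone speech.
    rir = synthetic_rir(rt60_s, sample_rate, seed)
    return convolve_same(clean, rir), clean

# Usage: a 0.1 s test tone placed in a simulated room with RT60 = 0.3 s.
sr = 8000
clean = [math.sin(2 * math.pi * 200 * n / sr) for n in range(800)]
reverb_input, target = make_dereverb_pair(clean, rt60_s=0.3, sample_rate=sr)
```

Varying the RT60 and the random seed across examples gives the model many different simulated rooms, which is one plausible way to cover settings such as stairwells and large halls.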

We’re just getting started exploring the ways AI and machine learning can improve sound quality and enable the best calling and meeting experiences in Teams. To foster research in this area, we have organized several competitions that bring together researchers and practitioners from all over the world. The latest one was held earlier this year: the ICASSP 2022 Acoustic Echo Cancellation Challenge (Microsoft Research).

Echo cancellation and dereverberation are rolling out for Windows and Mac devices, and will be coming soon to mobile platforms.

Keep watching this blog to learn about new machine learning and AI-driven optimizations in Teams designed to improve the quality of your calls and meetings.

Date: 2022-06-13 13:00:00Z

Link: https://techcommunity.microsoft.com/t5/microsoft-teams-blog/new-ai-based-speech-enhancements-for-microsoft-teams/ba-p/3490168